🤖 Setting Up a Raspberry Pi 4 Kubernetes Cluster

March 07, 2020

I’ve been wanting to set up a Raspberry Pi powered Kubernetes cluster for quite some time, and I finally found the time to get it working. If you’re anything like me, you often find yourself tearing things apart and rebuilding them. To make rebuilding the cluster less of a chore, I’ve been recording my efforts as Ansible playbooks. This post is mostly a write-up of how I built the cluster, but you may find it useful if you’re building your own.

At the end of this process you will have a Kubernetes cluster with a single control-plane node, running on Raspberry Pis. My cluster consists of 4 nodes (1 control-plane node and 3 workers), but you can add more workers.

1. Get The Equipment

This is what I went for:

- 4 x Raspberry Pi 4 - I used the 4GB version

- 4 x Fast 32GB/64GB Micro SD Card - I used a SanDisk Extreme 32 GB microSDHC

- 1 x 8 Port Gigabit Switch - I used a NETGEAR 8-Port Gigabit Ethernet Unmanaged Switch

- 1 x Case of your choice - I used a Stacked Case With Fans (minus the heatsinks)

- 4 x USB Type-C Power Adapter(s) - Ideally something with four outputs

2. Write an Operating System to the SD Cards

I chose an ARM64 build of Ubuntu Server (19.10 or 20.04 should both work) for my cluster. The main reasons are that it’s Debian-based (I like Debian) and that an ARM64 build is available. If you opt for a different operating system, you will need to edit the Ansible playbooks used in this post.

You can download images from the Ubuntu Raspberry Pi Download Page.

Write the image to your SD cards. If you don’t know how, Ubuntu provides instructions for writing the images to an SD card on their Thank You page.
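If you’re on Linux and prefer the command line, below is a minimal sketch of writing a compressed image with xzcat and GNU dd. The function name, the image file name and the device path are placeholders I’ve made up. Unmount any partitions your desktop auto-mounted from the card, and find the card’s device with lsblk first. dd overwrites whatever device it’s given without asking.

```shell
#!/usr/bin/env bash
# Sketch: write an Ubuntu Raspberry Pi image to an SD card (Linux, GNU dd).
# WARNING: dd overwrites the target device without any confirmation.

flash_image() {
  local image="$1" device="$2"

  # Refuse to run unless the image exists and the target is a block device
  if [ ! -f "$image" ]; then
    echo "no such image: $image" >&2
    return 1
  fi
  if [ ! -b "$device" ]; then
    echo "not a block device: $device" >&2
    return 1
  fi

  # Decompress .xz images on the fly; write anything else as-is
  case "$image" in
    *.xz) xzcat "$image" ;;
    *)    cat "$image" ;;
  esac | sudo dd of="$device" bs=4M conv=fsync status=progress
}

# Example (hypothetical file name and device; check yours with lsblk):
# flash_image ubuntu-arm64+raspi.img.xz /dev/sdX
```

The block-device check exists so that a typo in the device path fails loudly rather than writing the image to a regular file.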

3. Prepare the Raspberry Pi Nodes

Before you can start to run the Ansible playbooks you need to set up a few things.

A. Enable SSH

- Insert the SD card into any computer and mount the first partition (named boot)

- Create an empty file named ssh in the boot partition - this enables remote SSH login
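Here is the same step as a small shell sketch. The function name is mine. The mount point in the example is an assumption: Ubuntu’s Pi images label the boot partition system-boot, but where it gets mounted depends on your OS.

```shell
#!/usr/bin/env bash
# Sketch: create the empty "ssh" file that enables SSH on first boot.

enable_ssh() {
  local boot_dir="$1"

  # Make sure the boot partition is actually mounted where we think it is
  if [ ! -d "$boot_dir" ]; then
    echo "boot partition not mounted at: $boot_dir" >&2
    return 1
  fi

  # An empty file is all that's needed; touch won't change an existing file's contents
  touch "$boot_dir/ssh"
  echo "created $boot_dir/ssh"
}

# Example (assumed mount point; check where your OS mounted the partition):
# enable_ssh "/media/$USER/system-boot"
```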

B. Change the default password of the ubuntu user

If you have a display, a keyboard and the appropriate cable, connect each Raspberry Pi to the display and keyboard. Alternatively, find the IP address that each Pi has been assigned (your home router’s web interface or DHCP server logs can help you find this) and connect via SSH.

Log in to each Pi with the username ubuntu and password ubuntu, and follow the prompts to change the password. Just use something temporary, as you’ll be deleting this user shortly.

C. Configure Fixed IP addresses

- Once you have changed the password you will be logged out; log in again

- Enter cat /sys/class/net/eth0/address and note down the MAC address of each Pi:

  $ cat /sys/class/net/eth0/address
  de:ad:be:ef:ca:fe

- On your home router or DHCP server, set up a fixed address for each Raspberry Pi

- Restart each Pi to pick up its new IP address; you may also need to restart your home router / DHCP server
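How you reserve addresses depends entirely on your router. If your DHCP server happens to be dnsmasq, a static lease is a single dhcp-host line per Pi. The sketch below builds that line from the MAC you noted down. The function name is hypothetical, and the address is just one of the example IPs used later in this post.

```shell
#!/usr/bin/env bash
# Sketch: print a dnsmasq static-lease line for one Pi.
# Only relevant if your DHCP server happens to be dnsmasq.

dhcp_host_line() {
  local mac="$1" ip="$2"
  local mac_re='^([0-9a-fA-F]{2}:){5}[0-9a-fA-F]{2}$'

  # Expect six colon-separated hex pairs, as printed by /sys/class/net/eth0/address
  if ! [[ $mac =~ $mac_re ]]; then
    echo "not a MAC address: $mac" >&2
    return 1
  fi

  echo "dhcp-host=${mac},${ip}"
}

# Example: prints dhcp-host=de:ad:be:ef:ca:fe,10.42.1.200
# dhcp_host_line de:ad:be:ef:ca:fe 10.42.1.200
```

Paste one line per Pi into your dnsmasq configuration and restart dnsmasq.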

D. Install Python

One final step before we’re ready to start issuing commands to the Raspberry Pis using Ansible is to install Python. This can be Python 2.7 or 3; here we are using Python 3.

- Log back in to each Pi

- Install Python with the following:

  $ sudo apt update && sudo apt install python3 -y

- Log out of each node
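If you’d rather not log in to each Pi by hand, you can loop over the nodes from your host with ssh instead. This is a rough sketch that assumes the default ubuntu user still exists with the temporary password you set. The IP addresses are the example ones used later in this post, and the function name is mine. Expect a password prompt from SSH, and possibly another from sudo, for each node.

```shell
#!/usr/bin/env bash
# Sketch: install Python 3 on every Pi from your host, one node at a time.
# Assumes the default "ubuntu" user still exists with the password you set.

install_python_on_nodes() {
  local node
  for node in "$@"; do
    echo "==> $node"
    # -t allocates a terminal so sudo can prompt for the password
    ssh -t "ubuntu@${node}" 'sudo apt update && sudo apt install -y python3' || return 1
  done
}

# Example with the addresses used in this post (replace with your own):
# install_python_on_nodes 10.42.1.200 10.42.1.201 10.42.1.202 10.42.1.203
```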

4. Prepare Your Host

We now have a little preparation to complete on the device you’ll be running the Ansible playbooks from. These steps apply to Linux and Mac.

A. Create an SSH Key Pair

If you don’t already have an SSH key pair, use ssh-keygen to generate a new one.

Running ssh-keygen without any arguments generates an RSA key pair. You may want to read the docs and switch to a different key type, such as Ed25519, for additional security. Accept the default location and optionally enter a passphrase.
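If you do switch key types, the sketch below generates an Ed25519 pair and never overwrites an existing key. The function name is mine. Before switching, check which public key file user_setup/stage1.yaml reads for the authorized key task. I haven’t assumed it, but if the playbook expects ~/.ssh/id_rsa.pub, you’d need to point it at the new .pub file.

```shell
#!/usr/bin/env bash
# Sketch: generate an Ed25519 key pair instead of the default RSA one.

generate_ed25519_key() {
  local key_path="${1:-$HOME/.ssh/id_ed25519}"

  # Never overwrite an existing key
  if [ -e "$key_path" ]; then
    echo "key already exists at $key_path, leaving it alone" >&2
    return 0
  fi

  mkdir -p "$(dirname "$key_path")"
  chmod 700 "$(dirname "$key_path")"

  if [ "$#" -ge 2 ]; then
    # Passphrase given as the second argument ("" means no passphrase)
    ssh-keygen -q -t ed25519 -f "$key_path" -N "$2" -C "$(id -un)@$(uname -n)"
  else
    # Otherwise ssh-keygen prompts for a passphrase interactively
    ssh-keygen -t ed25519 -f "$key_path" -C "$(id -un)@$(uname -n)"
  fi
}

# Example: writes ~/.ssh/id_ed25519 and ~/.ssh/id_ed25519.pub
# generate_ed25519_key
```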

$ ssh-keygen
Generating public/private rsa key pair.
Enter file in which to save the key (/home/user/.ssh/id_rsa):
Created directory '/home/user/.ssh'.
Enter passphrase (empty for no passphrase):
Enter same passphrase again:
Your identification has been saved in /home/user/.ssh/id_rsa
Your public key has been saved in /home/user/.ssh/id_rsa.pub
The key fingerprint is:
SHA256:abXLRkBBWL8F5HkhPnkvW8xxPpLSB9h7jqRZ/MhvEI8 user@penguin
The key's randomart image is:
+---[RSA 3072]----+
| +=o+ . |
| .. + =o. |
| . O.+o. .|
| + Bo=++ |
| S +..OO+.|
| . o .BEO..|
| +o.+.o |
| . .. |
| .. |
+----[SHA256]-----+

B. Install Ansible

For instructions on installing Ansible, check out Installing Ansible.

Once it’s installed, run Ansible with the --version flag to confirm it has been installed correctly:

$ ansible --version
ansible 2.9.4
  config file = /etc/ansible/ansible.cfg
  configured module search path = ['/home/user/.ansible/plugins/modules', '/usr/share/ansible/plugins/modules']
  ansible python module location = /usr/lib/python3/dist-packages/ansible
  executable location = /usr/bin/ansible
  python version = 3.7.6 (default, Jan 19 2020, 22:34:52) [GCC 9.2.1 20200117]

C. Get the Ansible Playbooks and Configure the Hosts file

- Clone the ansible-raspberry-pi-kubernetes repository:

  $ git clone https://github.com/aporcupine/ansible-raspberry-pi-kubernetes

- Edit the hosts file with your IP addresses and preferred hostnames. Enter one host under the control-plane section; this will be your ‘master’ node, whilst the others will be ‘workers’.
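For the cluster in this post, the hosts file might look something like the inventory written by the sketch below. The group names (controlplane, workers) and the new_hostname variable are inferred from the playbook output later in this post, not copied from the repo. Compare them with the repo’s own hosts file before overwriting it.

```shell
#!/usr/bin/env bash
# Sketch: write an inventory matching the cluster in this post.
# Group names and the new_hostname variable are inferred from the playbook
# output shown in this post; check them against the repo's hosts file.

write_inventory() {
  local path="${1:-hosts}"
  cat > "$path" <<'EOF'
[controlplane]
10.42.1.200 new_hostname=cl0

[workers]
10.42.1.201 new_hostname=cl1
10.42.1.202 new_hostname=cl2
10.42.1.203 new_hostname=cl3
EOF
}

# Example, from inside the cloned repository:
# write_inventory hosts
```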

5. Create Your New User Account

In this step we’ll set up a new user, copy over a public key, and finally remove the ubuntu user.

A. Run user_setup/stage1

This stage will prompt you for the username and password of your new user. It will then create that user and add your public key to its authorized keys file.

I’d recommend using the same username as the account you’re logged in to on your host. Otherwise you’ll need to add -u followed by the username to all subsequent Ansible calls.

From the directory that you cloned the ansible-raspberry-pi-kubernetes repo into, run the following. When prompted, enter the password you set in step 3B:

$ ansible-playbook user_setup/stage1.yaml -i hosts \
    --ask-become-pass --ask-pass -u ubuntu

SSH password:

BECOME password[defaults to SSH password]:

Ansible command: `--ask-become-pass --ask-pass -u ubuntu`

Logged in, changed the password and installed Python (yes/no)?: yes

Enter username for new user: tom

Enter password for new user:

confirm Enter password for new user:

PLAY [all] *****************************************************************************************************************************

TASK [Gathering Facts] *****************************************************************************************************************

ok: [10.42.1.200]

ok: [10.42.1.202]

ok: [10.42.1.201]

ok: [10.42.1.203]

TASK [Add the user "tom" with a bash shell, appending the group 'sudo' to the user's groups] *******************************************

changed: [10.42.1.202]

changed: [10.42.1.203]

changed: [10.42.1.201]

changed: [10.42.1.200]

TASK [Set authorized key taken from file] **********************************************************************************************

changed: [10.42.1.203]

changed: [10.42.1.202]

changed: [10.42.1.200]

changed: [10.42.1.201]

PLAY RECAP *****************************************************************************************************************************

10.42.1.200 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.42.1.201 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.42.1.202 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.42.1.203 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

B. Run user_setup/stage2

This step will kill any processes running under the default ubuntu user, and will then delete it.
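Stage 2 removes the ubuntu account, and the later playbooks connect using your key. It’s therefore worth confirming first that your new user can log in to every node with that key. The sketch below does this check. The function name is mine, and the addresses in the example are the ones used in this post. BatchMode=yes stops ssh from falling back to a password prompt, so a node only passes if key authentication works.

```shell
#!/usr/bin/env bash
# Sketch: confirm key-based SSH login works for a user on every node.

check_key_login() {
  local user="$1" node failed=0
  shift
  for node in "$@"; do
    # BatchMode=yes disables password prompts, so only key auth can succeed
    if ssh -o BatchMode=yes -o ConnectTimeout=5 "${user}@${node}" true; then
      echo "ok   ${user}@${node}"
    else
      echo "FAIL ${user}@${node}" >&2
      failed=1
    fi
  done
  return "$failed"
}

# Example: check_key_login "$USER" 10.42.1.200 10.42.1.201 10.42.1.202 10.42.1.203
```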

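From the directory that you cloned the ansible-raspberry-pi-kubernetes repo into, run the command below. From now on, when prompted for the BECOME password, enter the password you set for your new user in stage 1. Don’t worry about the FAILED lines for the killall task. killall exits with code 1 when no processes are running under the ubuntu user, and the playbook ignores that error.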

$ ansible-playbook user_setup/stage2.yaml -i hosts --ask-become-pass

BECOME password:

PLAY [all] *****************************************************************************************************************************

TASK [Gathering Facts] *****************************************************************************************************************

ok: [10.42.1.200]

ok: [10.42.1.203]

ok: [10.42.1.202]

ok: [10.42.1.201]

TASK [kill everything running under user ubuntu] ***************************************************************************************

changed: [10.42.1.201]

fatal: [10.42.1.200]: FAILED! => {"changed": true, "cmd": "killall -u ubuntu", "delta": "0:00:00.018396", "end": "2020-03-09 20:12:13.312472", "msg": "non-zero return code", "rc": 1, "start": "2020-03-09 20:12:13.294076", "stderr": "", "stderr_lines": [], "stdout": "", "stdout_lines": []}

...ignoring

fatal: [10.42.1.202]: FAILED! => {"changed": true, "cmd": "killall -u ubuntu", "delta": "0:00:00.026985", "end": "2020-03-09 20:12:13.439785", "msg": "non-zero return code", "rc": 1, "start": "2020-03-09 20:12:13.412800", "stderr": "", "stderr_lines": [], "stdout": "", "stdout_lines": []}

...ignoring

fatal: [10.42.1.203]: FAILED! => {"changed": true, "cmd": "killall -u ubuntu", "delta": "0:00:00.027249", "end": "2020-03-09 20:12:13.564656", "msg": "non-zero return code", "rc": 1, "start": "2020-03-09 20:12:13.537407", "stderr": "", "stderr_lines": [], "stdout": "", "stdout_lines": []}

...ignoring

TASK [remove the user 'ubuntu'] ********************************************************************************************************

changed: [10.42.1.200]

changed: [10.42.1.203]

changed: [10.42.1.201]

changed: [10.42.1.202]

PLAY RECAP *****************************************************************************************************************************

10.42.1.200 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=1

10.42.1.201 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.42.1.202 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=1

10.42.1.203 : ok=3 changed=2 unreachable=0 failed=0 skipped=0 rescued=0 ignored=1

6. Configure Nodes and Install Docker and Kubernetes

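In this step we’ll do some node configuration (including setting the hostname) and then install Docker, kubectl, kubelet and kubeadm. The playbook also updates all packages and reboots the nodes to apply a cgroup change, so expect it to take a while. The FAILED lines for the cgroup test are expected. grep exits with code 1 when cgroup_enable=memory isn’t yet in /boot/firmware/nobtcmd.txt, and the playbook ignores that error and then adds the setting.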
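The cgroup tasks follow a check-then-append pattern. The playbook greps for the flag and only adds it if it’s missing. Below is a hand-run sketch of the same idea, which may help if you’re debugging a single node. It isn’t the playbook’s actual code, and the function name is mine. The file holds a single line of kernel parameters, so the flag is appended to that line rather than added as a new one. The path in the example is the one the playbook uses; other Ubuntu releases may use a different file.

```shell
#!/usr/bin/env bash
# Sketch: append a kernel parameter to a one-line cmdline file, only if missing.
# Uses GNU sed's -i (fine on the Pi); assumes the flag contains no '/' or '&'.

ensure_cmdline_flag() {
  local file="$1" flag="$2"

  # grep exits 1 when the flag is absent, which is what the playbook's
  # "test for cgroup_enable=memory" task reports as FAILED ... ignoring
  if grep -qw -- "$flag" "$file"; then
    echo "$flag already present in $file"
    return 0
  fi

  # Append to the end of line 1 instead of adding a new line
  sed -i "1 s/\$/ ${flag}/" "$file"
  echo "added $flag to $file (reboot to apply)"
}

# Example, as root on a node:
# ensure_cmdline_flag /boot/firmware/nobtcmd.txt cgroup_enable=memory
```

Running it twice leaves the file unchanged the second time, which is the property that makes the playbook safe to re-run.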

From the directory that you cloned the ansible-raspberry-pi-kubernetes repo into, run the following:

$ ansible-playbook configure_hosts/configure_hosts.yaml -i hosts --ask-become-pass

BECOME password:

PLAY [all] *****************************************************************************************************************************

TASK [Gathering Facts] *****************************************************************************************************************

ok: [10.42.1.203]

ok: [10.42.1.202]

ok: [10.42.1.201]

ok: [10.42.1.200]

TASK [update all packages to the latest version (this may take some time)] *************************************************************

changed: [10.42.1.201]

changed: [10.42.1.202]

changed: [10.42.1.203]

changed: [10.42.1.200]

TASK [set hostnames using inventory new_hostname var] **********************************************************************************

changed: [10.42.1.200]

changed: [10.42.1.202]

changed: [10.42.1.203]

changed: [10.42.1.201]

TASK [test for cgroup_enable=memory] ***************************************************************************************************

fatal: [10.42.1.201]: FAILED! => {"changed": true, "cmd": ["grep", "cgroup_enable=memory", "/boot/firmware/nobtcmd.txt"], "delta": "0:00:00.010532", "end": "2020-03-09 20:24:47.515164", "msg": "non-zero return code", "rc": 1, "start": "2020-03-09 20:24:47.504632", "stderr": "", "stderr_lines": [], "stdout": "", "stdout_lines": []}

...ignoring

fatal: [10.42.1.202]: FAILED! => {"changed": true, "cmd": ["grep", "cgroup_enable=memory", "/boot/firmware/nobtcmd.txt"], "delta": "0:00:00.010741", "end": "2020-03-09 20:24:47.515771", "msg": "non-zero return code", "rc": 1, "start": "2020-03-09 20:24:47.505030", "stderr": "", "stderr_lines": [], "stdout": "", "stdout_lines": []}

...ignoring

fatal: [10.42.1.203]: FAILED! => {"changed": true, "cmd": ["grep", "cgroup_enable=memory", "/boot/firmware/nobtcmd.txt"], "delta": "0:00:00.010629", "end": "2020-03-09 20:24:47.523433", "msg": "non-zero return code", "rc": 1, "start": "2020-03-09 20:24:47.512804", "stderr": "", "stderr_lines": [], "stdout": "", "stdout_lines": []}

...ignoring

fatal: [10.42.1.200]: FAILED! => {"changed": true, "cmd": ["grep", "cgroup_enable=memory", "/boot/firmware/nobtcmd.txt"], "delta": "0:00:00.008777", "end": "2020-03-09 20:24:47.492507", "msg": "non-zero return code", "rc": 1, "start": "2020-03-09 20:24:47.483730", "stderr": "", "stderr_lines": [], "stdout": "", "stdout_lines": []}

...ignoring

TASK [add cgroup_enable=memory to nobtcmd.txt] *****************************************************************************************

changed: [10.42.1.202]

changed: [10.42.1.200]

changed: [10.42.1.203]

changed: [10.42.1.201]

TASK [add overlay to modules to load] **************************************************************************************************

changed: [10.42.1.200]

changed: [10.42.1.202]

changed: [10.42.1.203]

changed: [10.42.1.201]

TASK [add br_netfilter to modules to load] *********************************************************************************************

changed: [10.42.1.201]

changed: [10.42.1.200]

changed: [10.42.1.202]

changed: [10.42.1.203]

TASK [add br_netfilter to modules to load] *********************************************************************************************

changed: [10.42.1.201]

changed: [10.42.1.200]

changed: [10.42.1.202]

changed: [10.42.1.203]

TASK [reboot the servers to apply cgroup change] ***************************************************************************************

changed: [10.42.1.203]

changed: [10.42.1.202]

changed: [10.42.1.201]

changed: [10.42.1.200]

TASK [install software-properties-common] **********************************************************************************************

ok: [10.42.1.200]

ok: [10.42.1.202]

ok: [10.42.1.203]

ok: [10.42.1.201]

TASK [add docker-ce key] ***************************************************************************************************************

changed: [10.42.1.202]

changed: [10.42.1.203]

changed: [10.42.1.201]

changed: [10.42.1.200]

TASK [add docker-ce repo] **************************************************************************************************************

changed: [10.42.1.201]

changed: [10.42.1.203]

changed: [10.42.1.202]

changed: [10.42.1.200]

TASK [install docker-ce] ***************************************************************************************************************

changed: [10.42.1.201]

changed: [10.42.1.203]

changed: [10.42.1.202]

changed: [10.42.1.200]

TASK [Hold containerd.io] **************************************************************************************************************

changed: [10.42.1.201]

changed: [10.42.1.200]

changed: [10.42.1.202]

changed: [10.42.1.203]

TASK [Hold docker-ce] ******************************************************************************************************************

changed: [10.42.1.201]

changed: [10.42.1.202]

changed: [10.42.1.203]

changed: [10.42.1.200]

TASK [Hold docker-ce-cli] **************************************************************************************************************

changed: [10.42.1.201]

changed: [10.42.1.202]

changed: [10.42.1.203]

changed: [10.42.1.200]

TASK [create docker.service.d dir] *****************************************************************************************************

changed: [10.42.1.200]

changed: [10.42.1.201]

changed: [10.42.1.203]

changed: [10.42.1.202]

TASK [restart docker] ******************************************************************************************************************

changed: [10.42.1.202]

changed: [10.42.1.201]

changed: [10.42.1.203]

changed: [10.42.1.200]

TASK [update apt cache] ****************************************************************************************************************

changed: [10.42.1.202]

changed: [10.42.1.203]

changed: [10.42.1.201]

changed: [10.42.1.200]

TASK [install arptables] ***************************************************************************************************************

changed: [10.42.1.201]

changed: [10.42.1.203]

changed: [10.42.1.202]

changed: [10.42.1.200]

TASK [install ebtables] ****************************************************************************************************************

changed: [10.42.1.200]

changed: [10.42.1.201]

changed: [10.42.1.202]

changed: [10.42.1.203]

TASK [ensure we're not using nftables (1)] *********************************************************************************************

changed: [10.42.1.202]

changed: [10.42.1.201]

changed: [10.42.1.200]

changed: [10.42.1.203]

TASK [ensure we're not using nftables (2)] *********************************************************************************************

changed: [10.42.1.201]

changed: [10.42.1.200]

changed: [10.42.1.202]

changed: [10.42.1.203]

TASK [ensure we're not using nftables (3)] *********************************************************************************************

changed: [10.42.1.200]

changed: [10.42.1.201]

changed: [10.42.1.202]

changed: [10.42.1.203]

TASK [ensure we're not using nftables (4)] *********************************************************************************************

changed: [10.42.1.200]

changed: [10.42.1.201]

changed: [10.42.1.202]

changed: [10.42.1.203]

TASK [add k8s apt-key] *****************************************************************************************************************

changed: [10.42.1.200]

changed: [10.42.1.202]

changed: [10.42.1.203]

changed: [10.42.1.201]

TASK [update apt cache] ****************************************************************************************************************

changed: [10.42.1.200]

changed: [10.42.1.202]

changed: [10.42.1.201]

changed: [10.42.1.203]

TASK [install k8s] *********************************************************************************************************************

changed: [10.42.1.202]

changed: [10.42.1.201]

changed: [10.42.1.203]

changed: [10.42.1.200]

TASK [Hold kubectl] ********************************************************************************************************************

changed: [10.42.1.203]

changed: [10.42.1.202]

changed: [10.42.1.201]

changed: [10.42.1.200]

TASK [Hold kubelet] ********************************************************************************************************************

changed: [10.42.1.202]

changed: [10.42.1.201]

changed: [10.42.1.203]

changed: [10.42.1.200]

TASK [Hold kubeadm] ********************************************************************************************************************

changed: [10.42.1.202]

changed: [10.42.1.200]

changed: [10.42.1.201]

changed: [10.42.1.203]

PLAY RECAP *****************************************************************************************************************************

10.42.1.200 : ok=30 changed=15 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

10.42.1.201 : ok=30 changed=15 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

10.42.1.202 : ok=30 changed=15 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

10.42.1.203 : ok=30 changed=15 unreachable=0 failed=0 skipped=1 rescued=0 ignored=0

7. Initiate the Cluster

Our last step is to initialise the cluster. This playbook runs kubeadm init on the control-plane node and installs a pod network add-on. It then gets the join command from the control-plane node and runs it on all the other nodes.

In this step you’ll be asked whether you want to schedule pods on your control-plane node. Kubernetes doesn’t do this by default, but it is recommended for small clusters like this one.
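For reference, here is a rough manual approximation of what the playbook automates, using standard kubeadm and kubectl commands. These are not the playbook’s exact commands or flags. The network add-on isn’t named in this post, so its manifest is a placeholder argument. Some add-ons also need a --pod-network-cidr flag passed to kubeadm init, so check your add-on’s docs. The run and init_control_plane helpers are mine, and DRY_RUN=1 prints the commands instead of running them.

```shell
#!/usr/bin/env bash
# Sketch: a manual approximation of what init_cluster.yaml automates.
# NOT the playbook's exact commands. Set DRY_RUN=1 to only print them.

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Run on the control-plane node as your normal (sudo-capable) user
init_control_plane() {
  local network_manifest="$1"  # manifest for the pod network add-on you choose

  if [ -z "$network_manifest" ]; then
    echo "usage: init_control_plane <network-add-on-manifest>" >&2
    return 1
  fi

  run sudo kubeadm init
  run mkdir -p "$HOME/.kube"
  run sudo cp /etc/kubernetes/admin.conf "$HOME/.kube/config"
  run sudo chown "$(id -u):$(id -g)" "$HOME/.kube/config"

  # Optional: let ordinary pods run on the control-plane node
  # (the taint is named node-role.kubernetes.io/master in v1.17)
  run kubectl taint nodes --all node-role.kubernetes.io/master-

  run kubectl apply -f "$network_manifest"

  # Prints a "kubeadm join ..." command; run it with sudo on each worker
  run sudo kubeadm token create --print-join-command
}

# Example: DRY_RUN=1 init_control_plane my-network-add-on.yaml
```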

From the directory that you cloned the ansible-raspberry-pi-kubernetes repo into, run the following:

$ ansible-playbook init_cluster/init_cluster.yaml -i hosts --ask-become-pass

BECOME password:

Would you like to schedule pods on your control-plane node - recommended for small clusters (yes/no)?: yes

PLAY [controlplane] ***********************************************************************************************************************************************************************

TASK [Gathering Facts] ********************************************************************************************************************************************************************

ok: [10.42.1.200]

TASK [initialize the cluster] *************************************************************************************************************************************************************

changed: [10.42.1.200]

TASK [create .kube directory] *************************************************************************************************************************************************************

changed: [10.42.1.200]

TASK [copy admin.conf to user's kube config] **********************************************************************************************************************************************

changed: [10.42.1.200]

TASK [enable ability to schedule pods on the control-plane node (master)] *****************************************************************************************************************

changed: [10.42.1.200]

TASK [install pod network add-on] *********************************************************************************************************************************************************

changed: [10.42.1.200]

TASK [get join command] *******************************************************************************************************************************************************************

changed: [10.42.1.200]

TASK [set join command] *******************************************************************************************************************************************************************

ok: [10.42.1.200]

PLAY [workers] ****************************************************************************************************************************************************************************

TASK [Gathering Facts] ********************************************************************************************************************************************************************

ok: [10.42.1.201]

ok: [10.42.1.203]

ok: [10.42.1.202]

TASK [join workers to cluster] ************************************************************************************************************************************************************

changed: [10.42.1.202]

changed: [10.42.1.203]

changed: [10.42.1.201]

PLAY RECAP ********************************************************************************************************************************************************************************

10.42.1.200 : ok=8 changed=6 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.42.1.201 : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.42.1.202 : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

10.42.1.203 : ok=2 changed=1 unreachable=0 failed=0 skipped=0 rescued=0 ignored=0

8. Give it a test!

Log in to your control-plane node and run the following:

$ kubectl get nodes
NAME   STATUS   ROLES    AGE    VERSION
cl0    Ready    master   102m   v1.17.3
cl1    Ready    <none>   101m   v1.17.3
cl2    Ready    <none>   101m   v1.17.3
cl3    Ready    <none>   101m   v1.17.3

You should see each of your nodes listed as Ready, as shown above, but it may take a few minutes until all of them are ready.
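If you’re scripting this, you’ll want to wait for the nodes rather than re-running the command by hand. The sketch below polls kubectl get nodes until a given number of nodes report Ready, or until a timeout in seconds expires. The function name and the 5-second poll interval are my own choices. kubectl wait --for=condition=Ready nodes --all --timeout=300s does much the same job in one line.

```shell
#!/usr/bin/env bash
# Sketch: wait until at least N nodes report STATUS "Ready".

wait_for_ready_nodes() {
  local expected="$1" timeout="${2:-300}" waited=0 ready

  while :; do
    # Second column of "kubectl get nodes" is STATUS
    ready="$(kubectl get nodes --no-headers | awk '$2 == "Ready"' | wc -l)"
    ready=$((ready))  # strip any padding from wc

    if [ "$ready" -ge "$expected" ]; then
      echo "$ready/$expected nodes Ready"
      return 0
    fi
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out: $ready/$expected nodes Ready" >&2
      return 1
    fi

    sleep 5
    waited=$((waited + 5))
  done
}

# Example for the 4-node cluster in this post:
# wait_for_ready_nodes 4 300
```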

Let’s run a quick nginx deployment. Whilst logged in to the control-plane node, run the following to create the nginx.yaml file:

cat <<EOF >nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 4
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
EOF

Apply the deployment:

$ kubectl apply -f nginx.yaml
deployment.apps/nginx created

Expose the deployment:

$ kubectl expose deployment/nginx --type=NodePort
service/nginx exposed

Check that the pods are running; you should see 4 listed as available:

$ kubectl get deployment/nginx

NAME READY UP-TO-DATE AVAILABLE AGE

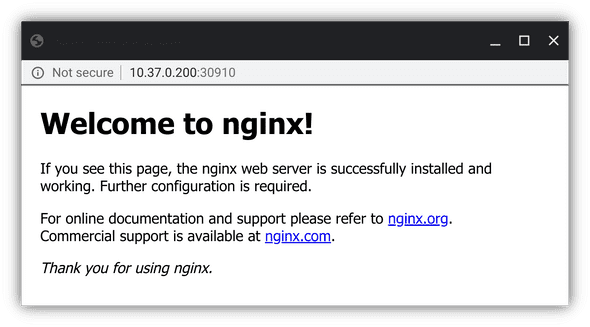

nginx 4/4 4 4 35s

Find out which port nginx has been exposed on; in the example below it is 30910:

$ kubectl get service/nginx
NAME    TYPE       CLUSTER-IP       EXTERNAL-IP   PORT(S)        AGE
nginx   NodePort   10.108.201.143   <none>        80:30910/TCP   2m6s

Open a browser and enter the IP address of any of your nodes with that port (in our case http://10.42.1.200:30910). You should see the nginx welcome page.
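To do the same check from the command line, the sketch below reads the NodePort with a JSONPath query and fetches the page with curl. It assumes the service is named nginx, as above, and that kubectl and curl are both available where you run it. The function name is mine.

```shell
#!/usr/bin/env bash
# Sketch: look up the nginx NodePort and check the welcome page is served.

check_nginx() {
  local node_ip="$1" port url

  # First (and only) port of the "nginx" service created by kubectl expose
  port="$(kubectl get service nginx -o jsonpath='{.spec.ports[0].nodePort}')"
  if [ -z "$port" ]; then
    echo "could not read the NodePort of service/nginx" >&2
    return 1
  fi

  url="http://${node_ip}:${port}/"
  if curl -fsS "$url" | grep -q "Welcome to nginx"; then
    echo "nginx is answering on $url"
  else
    echo "no nginx welcome page at $url" >&2
    return 1
  fi
}

# Example: check_nginx 10.42.1.200
```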

Congratulations! You now have a Raspberry Pi Kubernetes cluster!